Danilo Benzatti

Here is the product of the ideas described in this essay. The simulation program allows the user to create a general graph and set two nodes to be connected by this alternative graph search algorithm. A simple simulation will be detailed further.

The program is available for download here and its full source code is also provided. This can be useful for getting more implementation details that were not covered in the essay. Note that this code was written in a few days and it is not optimized for speed or anything else. The intention is just to illustrate the ideas we have been discussed in an uncomplicated way.

The main part of the window is where the graph must be created. The nest will be highlighted in blue and the food source in red. There is a panel showing the path with higher pheromone concentration at the current turn. It is very interesting to observe this path changing during the simulation until a good result emerges from the collective behavior of the agents. Another panel shows the current turn, the current number of agents in the population and the quantity of food collected. The stop criteria can be adjusted at another panel. The simulation can stop after reaching a maximum number of turns or a certain rate of food collected per turn.

An example of a possible simulation is described below.

|

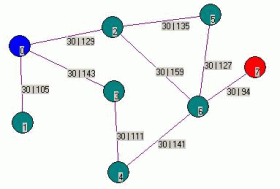

Initial State. The cities 0 and 7 indicate respectively the nest and the food source.

At each edge there is a number showing the pheromone intensity and the length of the route. "pheromone | length" |

|

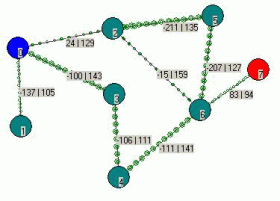

After 115 turns, considering the pheromone laid by the ants and the evaporation, the state of the graph is shown at this figure. The panel bellow shows the path with highest amount of pheromone:

|

|

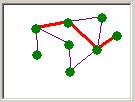

After 254 turns from the beginning the most used route has been changed. Since this path is shorter, the pheromone intensity tends to increase, attracting more and more ants as described before. The path shown in the panel is:

|

|

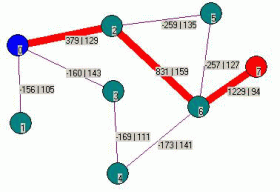

After 316 turns from the beginning, the system reaches the first stop criterion, and the result is then highlighted.

Note the pheromone intensity of the routes that compose the final result! |

Many examples were tested and the system provided satisfactory results. The performance has greatly increased after some improvements were added to this system. For example, an agent that is carrying food back to the nest leaves a much stronger pheromone trail over its routes. This allows a successful agent to exert more influence on the others.

For instructions on how to run simulations with this program, check the "readme.txt" file.

Since there is no explicit cost function related to the graph, this system could be used in applications that involves real-time unknown environments, for example robot exploration of unknown environments. Note that no prior knowledge is required because the effect of a cost function is a consequence of the length of routes that need more time to be crossed.

Many search algorithms rapidly increase the memory usage as the problem gets more complex. The agents that were used to simulate the ants have only a relative limited memory and thereby the system is not much affected by the complexity of the problem.

The size of the population is controlled by the natural selection. In larger environment, the agents will take more time before they get lost because there are more possible routes to be chosen. If they don't get lost, they won't be excluded of the population. This means the population will naturally grow in larger environments!

There is no central decision. The information is distributed among the agents and also the environment. If one of the agents is accidentally lost, the system will continue to work and the end result will not be affected. This property qualifies the system as robust.

The system is also adaptive. If during a simulation an edge of the graph is suddenly removed or even if a new one is created, the system can rapidly readapt to the modified environment and a new route will emerge.

Emerging Intelligence can also be used in Real Time Strategy games. The units can be designed to perform simple local actions in order to keep a low computational cost and the intelligence would emerge as a consequence of the self-organization of the colony of units. The Genetic Algorithms could be applied to select and evolve good units so as the player learns new strategies the game would becomes more intelligent too

Remember you can visit the Message Store to discuss this tutorial. Comments are always welcome!. There are already replies in the thread, why not join in?