Alex J. Champandard

For obvious reasons, when a human moves in a specific direction, he will most often be looking that way. Unless, of course, the subject is male, and someone attractive of the opposite sex happens to walk past… many have lost lives that way, so it's probably best to focus in front of you. The part of the environment behind is remembered in short-term memory, and is undoubtedly considered when sudden unplanned steps need to be made. We will not consider this possibility in this first tutorial on obstacle avoidance, as it seems more of an advanced issue which would only slightly improve the general behaviour. A later issue will cover this topic.

So what aspect of the information available is taken into account when executing reactive obstacle avoidance? Certainly the sense of distance between the current position and the obstacles in front needs to be represented. If I were controlling a robot in an unknown maze and forced to move forward at a steady pace, then when immediately faced with walls or obstacles I would turn the robot to face a more promising direction. This would allow me to avoid a collision in the short term, but also give me the most time to anticipate other obstructions.

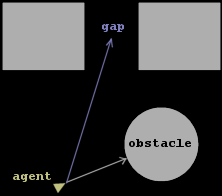

Figure 4: Example situation showing three obstacles, with the circle being closest. The arrows represent distances from the agent. A gap is present between the rectangles, identified by a longer distance.

This leads to the question of how to choose the new direction when an obstacle needs to be avoided. Conceptually, one would visually pick out a few openings, and select the most promising. This notion of opening, or gap, is difficult to express abstractly in order to transmit it to the computer AI. Indeed, it is challenging to determine the properties of a gap (how wide, how far…) let alone pick one out from the environment without using too much computation. An elegant and practical solution to this problem is to express the gap as a distance too. So an opening would be represented as a longer distance from the position of the bot, and an obstacle would be represented as a shorter distance. This approach has many advantages; not only is it much easier to extract this kind of information from the environment, but it also allows uncertainty to be expressed - distances that are neither short nor long, and so cannot be classed as obstacle or gap, can just be reported as such, and interpreted by the obstacle avoidance module according to the current situation.
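To make the idea concrete, here is a minimal sketch of how a handful of distance readings might be labelled as obstacle, gap or "in between". The `near` and `far` thresholds, the `Reading` names and the sample values are all assumptions made up for illustration; the obstacle avoidance module itself is free to interpret the raw distances however it likes.

```python
from enum import Enum


class Reading(Enum):
    OBSTACLE = "obstacle"
    UNCERTAIN = "uncertain"
    GAP = "gap"


def classify(distance, near=64.0, far=256.0):
    """Label one distance reading. The thresholds are hypothetical."""
    if distance <= near:
        return Reading.OBSTACLE   # short distance: something is close
    if distance >= far:
        return Reading.GAP        # long distance: an opening
    return Reading.UNCERTAIN      # neither short nor long: report as such


# Hypothetical readings: heading in degrees -> distance in world units.
readings = {-45: 40.0, 0: 150.0, 45: 400.0}
labels = {angle: classify(d) for angle, d in readings.items()}
```

Note that the uncertain case needs no special handling; it simply falls out of having two thresholds instead of one.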

Given these distances, the AI agent can decide which direction to head in. Intuitively, one would expect it to try to maximise the distance in front, which seems like the simplest option and the most promising. We won't, however, require this behaviour from our obstacle avoidance module, as we'll allow it the freedom of learning the best possible approach.
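For reference, the intuitive "maximise the distance in front" rule could be sketched as below. This is only the hand-written baseline mentioned above, not the learned behaviour; the function name and the sample readings are hypothetical.

```python
def most_open_heading(distances):
    """Return the heading whose free distance is largest.

    distances: dict mapping heading (degrees) -> free distance.
    Ties go to whichever heading appears first in the dict.
    """
    return max(distances, key=distances.get)


# Hypothetical readings taken at three headings.
best = most_open_heading({-30: 50.0, 0: 120.0, 30: 300.0})
```

A learned module would receive the same dictionary of distances, but would be free to trade off openness against, say, staying close to the current heading.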

We've ascertained that the AI agent is presented with an assortment of distances, representing either gaps, obstacles or objects that are considered in between. These distances need to be presented to the obstacle avoidance module, and should be ready on demand.

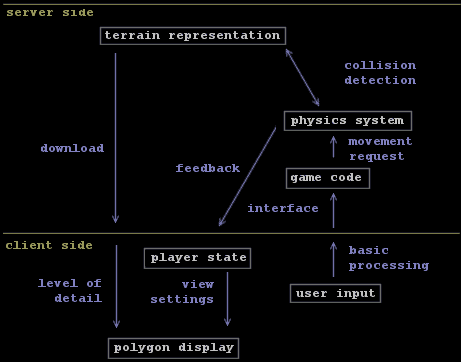

Firstly, we'd like our algorithm to be as flexible as possible, allowing it to be easily inserted into brand new games. This implies relying entirely on the game interface as designed, and resisting the temptation of diving into the back-end. Although the important information could fairly simply be extracted directly from the BSP (assuming you have a bit of knowledge of 3D data structures and vector math), it would be fairly short-sighted to do so. Not only would the system be dependent on pre-processing and lose flexibility, but it would also need redesigning for future back-end modifications.

Figure 5: Diagram revealing the structure of the game engine, emphasizing the fact that the interface should be used in preference to any other approach.

Using the game interface also has the advantage of being able to take the physics into account. The existing collision detection algorithm will be reused, saving us costly development time. We will also be able to handle other players and entities during the obstacle avoidance. And finally, our navigation decisions will be based on fully accurate physical data.

Even if we use the game interface, the distance queries will need to be done in real time. An efficient approach is therefore needed. We'd also like to exploit the full extent of the 3D environment, by not restricting movement to a grid. The simplicity of a grid-based approach does have many advantages from a higher-level point of view, so it will be considered for the deliberative path-planning module.

In the previous sub-section, we mentioned the use of distances to base our navigation upon. For mobile robots, this information is traditionally based on sensors. A laser or infra-red beam is bounced off the nearest wall, and a sense of proximity can be extracted from the reflection. An implication of this method is that the robots can't handle non-flat environments: stairs often appear as walls. This often does not matter for robots, as they are not capable of climbing stairs anyway. Our bots, however, would be seriously limited without the ability to climb stairs or ramps, so simple distance sensors will not suffice.

In essence, what we are interested in is the furthest possible walking distance given a particular heading. Namely, how far can the bot move forward without encountering trouble (lava pit, wall or even a non-solid obstacle such as a fire)? Our sensors should be capable of extracting this information, given the desired walking angle. The obstacle avoidance will benefit from being given such generic information, as it will handle the 3D environment transparently as a simple 2D navigation problem.

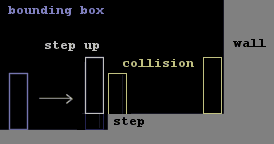

Figure 6: Virtual simulation of the walk. The first collision causes the bounding box to be raised, and the iteration to continue. When the wall is hit, the raised box still collides and the simulation stops.

The furthest possible walking distance can only be acquired by virtually simulating the walk itself. A naïve approach (complexity O(n)) would trace the bounding box of the bot step by step, dropping it down to the floor each time (to simulate walking down stairs). If an obstruction is encountered, a step up is taken, and the walk can continue. If two obstructions are encountered successively, it must be an obstacle that can't be stepped over, and the simulation stops. The advantage with this approach lies in our ability to determine if the path can be walked backwards. This is of no immediate use, but it will come in handy for the automatic waypoint laying algorithm.
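The step-by-step walk can be sketched on a toy model. Everything here is an assumption made for illustration: the world is reduced to a list of floor heights sampled along the heading (a wall is just a very tall sample), and `step_height` and `step_length` are hypothetical parameters. A real bot would ask the game interface to trace its bounding box instead of reading a list.

```python
def naive_walk_distance(floor, step_height=1.0, step_length=1.0):
    """Simulate the walk one sample at a time and return the distance covered.

    floor: floor heights sampled along the heading; floor[0] is where
           the bot currently stands (toy stand-in for the game world).
    """
    z = floor[0]
    travelled = 0.0
    for ahead in floor[1:]:
        if ahead > z:
            # First obstruction: take a step up and try again.
            if ahead > z + step_height:
                # Second obstruction in a row: it can't be stepped over.
                break
        # Move forward and drop back down to the floor.
        z = ahead
        travelled += step_length
    return travelled


# Two stairs going up, then a wall ten units high.
distance = naive_walk_distance([0, 0, 1, 1, 2, 10, 10])
```

This toy version happily walks off drops of any depth; hazards such as lava or fire would need an extra check per sample.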

This loop can be highly optimised using a divide and conquer approach. A few assumptions about the path need to be made though. If all the ledges can be jumped over and the rest of the level is just ramps, stairs, walls and floors, we can reduce the complexity of the algorithm to O(log n) in the average case, although it'll mostly be O(1) and sometimes O(n). The trick is to trace the bounding box from the current position to a distant point in the desired direction. When an obstacle is hit, the current position is moved to the point of impact, and a step up is taken. When the current position has remained the same for two iterations, the final point is found.
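Here is a rough sketch of the long-trace idea on a toy height-map, where the world is a list of floor heights sampled along the heading. The `trace` helper is a hypothetical stand-in for a single collision query to the game interface (here it loops over the list, but in an engine it would be one call), and the sketch assumes the floor never drops along the heading, which is a simplified version of the assumptions above.

```python
def trace(floor, start, z):
    """Hypothetical engine query: slide forward from index `start` at
    height z and return the last index reached before something blocks."""
    i = start
    while i + 1 < len(floor) and floor[i + 1] <= z:
        i += 1
    return i


def fast_walk_distance(floor, step_height=1.0, step_length=1.0):
    """One long trace per riser instead of one short trace per sample.

    Assumes floor heights never decrease along the heading.
    """
    # First trace at floor level, as far as it will go.
    pos = trace(floor, 0, floor[0])
    while True:
        # Step up at the point of impact and trace again.
        nxt = trace(floor, pos, floor[pos] + step_height)
        if nxt == pos:
            # Position unchanged after stepping up: final point found.
            break
        pos = nxt
    return pos * step_length


# Two stairs going up, then a wall ten units high.
distance = fast_walk_distance([0, 0, 1, 1, 2, 10, 10])
```

On a flat corridor this needs just two traces however long the corridor is, which is where the "mostly O(1)" behaviour comes from. If the floor can drop, a straight trace may sail over a pit, so this toy version can overestimate the distance.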

Similar types of simulation can determine the furthest possible crawling or swimming distance, for example. At a pinch, all this could be merged into the same function. The obstacle avoidance would then blindly take the distances as inputs, and specify the desired mode of movement.

Remember you can visit the Message Store to discuss this tutorial. Comments are always welcome! There are already replies in the thread, so why not join in?