Bot Navigation: Advanced Obstacle Avoidance

By ai-depot | June 30, 2002

Genetic Algorithms

Background

I’ve decided it’s probably best not to go into much theoretical detail about genetic algorithms here, as it would distract from the practical issued involved. Not only that, but we are discussing obstacle avoidance and motion control here, and too much detail would not be welcome. There will be a future feature on GAs in the short term future, so stay tuned. In the mean time, a good place to brush up on GA theory is the Genetic Algorithms Warehouse. You should get a feel for evolutionary solutions there before reading practical discussions here.

Genetic algorithms are based on Darwin’s theory of evolution, driven by the survival of the fittest. An entire population of individuals is created, and each evaluated at a given task and assigned a fitness value based on that. The genetic algorithm can then create new individuals based on the current population: it generally selects fitter individuals, and breeds them together. This can sometimes provide a big improvement of the solution, but generally it’s just a small adjustment. Performance increases greatly as generation after generation of individuals are created.

This process can be broken down into distincts components:

- Selection Scheme - Picks the individuals which will be allowed to mate and produce offspring.

- Breeding - The process of combining to individuals together to create a new offspring.

- Fitness Normalisation - Based on the raw result of the evaluation process discussed in the previous page, we can apply modifications to it for the genetic algorithm to perform better.

- Replacement Scheme - We then decide how the new individuals are to be re-inserted into the population, if at all.

Each have their impact on the overall performance in their own special way. We’ll go into the most important concepts now.

Selection and Replacement Scheme

There are two things to keep in mind during the selection of the parents, elitism and diversity. Basically, we want to pick the best bots, but not always the same ones.

Elitism is the process of selecting the better individuals, or more to the point, selecting individual with a bias towards the better ones. Elitism is important since it allows the solutions to get better over time. If you pick only the few best parents, and replace always the worst, the population will converge quicker… this means all the individuals will more or less all be the same. Contrariliy to general belief, the solution will not necessarly be optimal. On the contrary, I can pretty much guarantee it will be sub-optimal, or if you’re lucky, near-optimal. This approach is fine for small problems, and small population sizes.

For bigger problems, you need more diversity. This allows the genetic algorithm to

search through a wider variety of solutions, and thereby not get stuck in local maxima (solutions that seem good locally, but are not in the grand scheme of things). You can do this by adding a random parameter to the elitist selection scheme. For example, use a tournament selection scheme - which involves comparing random numbers scaled by the magnitide of the fitness.

Breeding

Figure 4: Example of converting two bots to chromosomes (or - in scientific terms - from phenotype to genotype), and breeding them together to obtain the child bot.

As you might expect, there are various ways of producing offspring. They usually involve representing the parent solutions as two big chromosomes (or rather, genotype since there’s only, and it’s non-human), and merging them together. One approach, called binary crossover picks two split points, and copies the father chromosome before and after the splits, and the mother chromosome after. This approach has a few problems, especially its dependency on the order of the genes in the genotype. A solution is the uniform crossover, which selects genes randomly (60% probability) from one or the other parent. After this, a mutation process is usually applied. This is to randomise the solution slightly by changing some genes (0.1% probability).

Search Space Properties

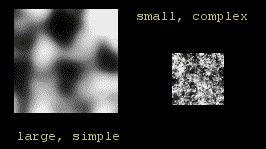

Figure 5: Example of different search spaces in 2D, a stereotypical example of small and complex search spaces.

The search space is the set of all possible solutions. Genetic algorithms generally perform well in large search spaces. However, complexity is a very important factor. When the fitness function is complex, and non-continuous, the genetic algorithm struggles. Then, if you combine large search spaces, you’re asking for trouble! GA are no miracle solution, though they do tend to do very well compared to other solutions. It’s therefore a good idea to keep the search space simple and small as much as possible!

Tags: none

Category: tutorial |