Creating Self-Aware Intelligence

By ai-depot | March 31, 2003

In this article, the necessity of atheistic thinking is proposed as an element towards creating self-awareness. Notions are introduced for solving problems using multiple ANNs combined to form a single brain, with a practical example using a visual system.

Written by Clint O'Dell.

Memories & Free Will

An Introduction

The different parts of the brain are specialists in particular tasks which connect to other specialized parts to create emergent properties, some of which are responsible for our conscious self-awareness. The first step in reproducing this emergent property of consciousness is to admit there is no soul. There is no one specific area a person can pinpoint and say, "this is you." Instead we must realize and acknowledge that consciousness is merely the exchange of information between brain functions and that nothing spiritual or mystical takes place.

Once the aspiring AI researcher understands that the mind is caused by purely physical properties, he can begin learning how the mind is distributed. By understanding the distributed nature of the brain we can better duplicate the results. Second, understand that while the distribution method may be hardware based, its underlying result is software focused. This means the distribution creates a pattern. That pattern is our identity, the "me" or "I" as we understand the term in common, everyday language. This means it does not matter what hardware created that pattern so long as the pattern exists. When the pattern stops existing (perhaps because the hardware stops distributing), the entity (such as "me" or "I") stops existing as well.

The brain creates patterns through neurons, but it is not enough to just have neurons. That would be like saying all life is just molecules, when actually it's the way molecules interact that results in life. Just like molecules, neurons need to be assembled in an organized fashion to be useful. Mimicking neurons is not the only way to create the needed patterns, but in my opinion it may be the most efficient method for speedy AI development using what we know today. Today's software neuron models may be crude compared to nature's neurons; but again, I have to stress it isn't the method that creates the pattern that's important, it's the pattern itself that is important.

Finally, the third bit of understanding every AI researcher needs is that it isn't enough just to have the pattern for a conscious being to be meaningful. The pattern will need a way to communicate with the outside world to be truly conscious & self-aware. That includes providing sufficient inputs & outputs.

We Are Our Memories

Notice that we have different types of thought. Sometimes we think in pictures, or, to explain more thoroughly, we see our memories. We also think in words: English, in my case. Other thoughts we have are remembered tastes, sounds, textures, situations, etc. Going a step further, because English is a spoken language it must be recognized through the part of the brain that interprets sound. Since English is also a symbolic language when written, it must be recognized through the part of the brain that interprets images. When we see a character in the alphabet, the vision processing part of our brain compares the lines of the letter to its "iconic memory" database to see if it recognizes that character. The sound processing part of our brain has the sound of that character stored in its own "echoic memory" database. These two functions each pass their recognized memory object to a third function, which compares the two memories against its own database, checking for a match. If a match is found, it declares to the next function that the two objects are related.

Points to remember are:

- Our various brain functions exchange information in the form of memory.

- Each function is specialized in what it does, such as vision recognition versus sound recognition.

- Every function maintains its own database of memories. Memories are not stored in a central location.
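The three points above can be sketched in code. This is only an illustrative toy, not a model of real brain function: the class names `RecognitionFunction` and `Associator`, the stimulus strings, and the labels are all hypothetical and chosen for this example. Each specialized function keeps its own private memory store, and the functions exchange only recognized memory objects.

```python
class RecognitionFunction:
    """A specialized function (e.g. vision or hearing) with its own memory store."""

    def __init__(self, name):
        self.name = name
        self.memory = {}  # stimulus -> recognized memory object (a label)

    def learn(self, stimulus, label):
        self.memory[stimulus] = label

    def recognize(self, stimulus):
        # Returns None when the stimulus is not in this function's memory.
        return self.memory.get(stimulus)


class Associator:
    """A third function that stores which visual and sound memories belong together."""

    def __init__(self):
        self.memory = set()  # pairs of (visual label, sound label)

    def learn(self, visual_label, sound_label):
        self.memory.add((visual_label, sound_label))

    def related(self, visual_label, sound_label):
        return (visual_label, sound_label) in self.memory


vision = RecognitionFunction("vision")      # holds the "iconic memory"
hearing = RecognitionFunction("hearing")    # holds the "echoic memory"
associator = Associator()

# Hypothetical training data for the letter A.
vision.learn("two diagonal strokes with a crossbar", "glyph-A")
hearing.learn("the sound 'ay'", "phoneme-A")
associator.learn("glyph-A", "phoneme-A")

seen = vision.recognize("two diagonal strokes with a crossbar")
heard = hearing.recognize("the sound 'ay'")
match = associator.related(seen, heard)
print(seen, heard, match)
```

Note that no object here holds all the memories: vision, hearing, and the associator each own a separate store, which is the decentralized arrangement the list describes.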

Free Will

When an entity changes a situation it is taking control of that situation. When it changes its own program it takes control of that program. Even if the program is programmed to change itself, it is still taking control. Further, if the entity recognizes it is the one doing the controlling, contains non-hardwired output abilities linked to its thoughts, and its pre-programmed hardware movements are terminated or weakened then it will become free to choose what it does next. What it chooses to do will come from any desires that have been hard coded into the system. In humans this desire comes from our emotions which are genetically hardwired. The emotional triggers in humans can change through associations. In machines, determinism/emotional triggers will also work through associations.

Free will is limited, however, since it can only choose within conditions bound by its input and is further weakened by finite output possibilities. Because simultaneous infinite inputs (as well as outputs) are impossible, due to the nature of infinity, it is equally impossible to have a 100% range of free choice. Choices can only be made as long as they're recognized & physical connections make them possible. Thus remember, free will entities have a full range of free choices within the boundaries.

Also note, it is possible to increase one's range of free will through education (i.e. increasing one's awareness of situations), association training (i.e. experience), and physically altering its inputs, outputs, & neural layer complexity (i.e. updating the physical distribution mechanisms or otherwise reprogramming the pattern generator).

Neural Networks

I once read in an article that neural networks are not the best solution for every problem. I can agree with that, but then the author goes on to say that there are some problems that neural networks simply cannot solve. Here is where I have to disagree. Because neural networks are modeled after the brain, they should be able to solve any problem our own brains can solve. The author probably thought this isn't the case because he may have been thinking only about simple NNs (neural networks) that take in a set number of inputs which travel through a large number of hidden layers before coming up with a prediction for the outputs. The problem is that all the data is thrown together into one large NN, and thus the entire network has a ton of unknown variables whose associations it must figure out. A better solution is to break down a large NN into many smaller NNs which specialize in particular tasks.

Before I get too far into the philosophical aspects of designing a conscious being, it would probably help if I explained a bit about neural networks. What are they and how do they work?

First, we have to think about the goal of a neural network. What is its goal? Basically, the goal is to recognize patterns and to demonstrate that it has done this by making predictions of what will happen next. Neural networks are very useful in recognizing patterns. The reason lies in the weighted connections between the neurons. Read on and I'll explain a bit of the detail on how this is accomplished. If you would like to learn more about neural networks, I have compiled a list of sites at the end of this article that I think are worthwhile in helping to teach the subject of artificial intelligence and especially neural networks.

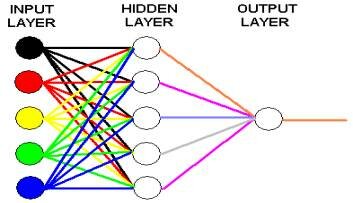

A neural network is made up of 3 main parts: an input layer, a middle or hidden layer, and an output layer. Every input is connected to every single neuron in the hidden layer. Each of those neurons is in turn connected to every neuron in the 2nd hidden layer, if there is one, and so on until each of the neurons in the last hidden layer connects to the output layer.
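To make the "everything connects to everything in the next layer" idea concrete, here is a minimal sketch that counts the connections in a fully connected network. The function names and the layout `[4, 3, 3, 1]` are hypothetical, chosen only for illustration.

```python
def connection_shapes(layer_sizes):
    """Return (from_size, to_size) for each pair of adjacent layers."""
    return list(zip(layer_sizes, layer_sizes[1:]))


def count_connections(layer_sizes):
    """Every neuron in one layer links to every neuron in the next layer."""
    return sum(a * b for a, b in connection_shapes(layer_sizes))


# Hypothetical layout: 4 inputs, two hidden layers of 3 neurons each, 1 output.
layout = [4, 3, 3, 1]
print(connection_shapes(layout))  # [(4, 3), (3, 3), (3, 1)]
print(count_connections(layout))  # 24
```

Each of those connections carries its own weight, so the connection count is also the number of weights the network has to learn.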

Each neuron connection is assigned a weight value. As each input passes its signal to the next neuron, the weighted connections of that neuron are totaled. When a certain value is reached, called the threshold, the neuron fires. Neurons can take in a wide variety of input values but fire only a single value. The output also fires only a single answer, thus acting as a neuron as well. The answer is compared with what the result should be, and if it is incorrect the network makes calculated, and many times random, changes in a few weighted values and "tries again". It keeps doing this until the output is correct. The end result is an impression left within a pattern of weighted values so that any input will travel along the same pattern, resulting in the same behavior. This behavior is called "learned behavior".
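The "change a few weights at random and try again" loop can be sketched as a plain random search. This is a deliberately naive illustration, not back-propagation and not necessarily how any real library trains a network. The function names, the weight range of -1 to 1, the threshold of 1, and the logical AND task are all assumptions made for this example.

```python
import random


def fires(weights, inputs, threshold):
    """Binary threshold neuron: 1 if the weighted sum reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def train_by_random_changes(examples, threshold=1.0, max_tries=10_000, seed=0):
    """Re-draw one weight at random until every example is answered correctly."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    n_inputs = len(examples[0][0])
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
    for _ in range(max_tries):
        if all(fires(weights, x, threshold) == target for x, target in examples):
            return weights
        # At least one answer was wrong: change one weight and try again.
        weights[rng.randrange(n_inputs)] = rng.uniform(-1.0, 1.0)
    raise RuntimeError("no working weights found within max_tries")


# Hypothetical task: fire only when both binary inputs are on (logical AND).
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
learned = train_by_random_changes(and_examples)
print([fires(learned, x, 1.0) for x, _ in and_examples])  # [0, 0, 0, 1]
```

The weights that come back are the "impression" the paragraph describes: once found, the same inputs always produce the same outputs.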

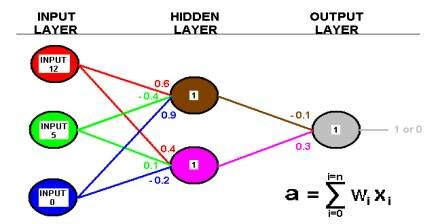

It sounds simple enough, but in my opinion an example makes all the difference, so check out the diagram as we calculate the activate values for each neuron. There are 3 inputs, 2 neurons in a single hidden layer, and one output neuron making up the output layer. The activate value determines whether or not the neuron will fire, so the first thing we must do is calculate the activate value for each neuron. To do this we use the formula A = W1 * X1 + W2 * X2 + W3 * X3 + ... + Wn * Xn, where A is the activate value, W is the weight value, and X is the input value. The number in the middle of each neuron is that neuron's threshold value. In order for the neuron to fire, its activate value must be greater than or equal to its threshold value.
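The formula and the firing rule translate almost directly into code. The sketch below uses hypothetical function names and made-up numbers (picked so the arithmetic is exact in floating point); it is not tied to the diagram.

```python
def activation(weights, inputs):
    """A = W1*X1 + W2*X2 + ... + Wn*Xn"""
    if len(weights) != len(inputs):
        raise ValueError("each input needs exactly one weight")
    return sum(w * x for w, x in zip(weights, inputs))


def neuron_fires(weights, inputs, threshold):
    """A neuron fires when its activate value is >= its threshold."""
    return activation(weights, inputs) >= threshold


# Hypothetical weights and inputs: 0.5*4 + (-0.25)*8 + 2.0*1 = 2.0
print(activation([0.5, -0.25, 2.0], [4, 8, 1]))         # 2.0
print(neuron_fires([0.5, -0.25, 2.0], [4, 8, 1], 2.0))  # True, equal counts as firing
print(neuron_fires([0.5, -0.25, 2.0], [4, 8, 1], 2.5))  # False
```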

Ok. Let's get started. Take the value of the first input, which is 12, and multiply it by the weighted connection to the first neuron, which is 0.6. Now take the value of the second input, which is 5, and multiply it by its weighted connection to the first neuron, which is -0.4. Finally take the value of the last input, which is 0, and multiply it by its first weighted connection. You should have 7.2, -2, 0. Add these numbers up and you have the activate value for the first neuron. 7.2 + -2 + 0 = 5.2. Since 5.2 is greater than that neuron's threshold value of 1, it will fire.

Next, let's calculate the activate value for the second neuron. Take the input value of the first input and multiply it by its weighted connection to the second neuron this time. Do the same for the second and third inputs, then sum the numbers together to get the activate value. You should have (12 * 0.4) + (5 * 0.1) + (0 * -0.2) = 5.3. Since 5.3 is greater than the second neuron's threshold of 1, it will also fire.

After all the activate values have been calculated for the first level of hidden neurons, the second, third, fourth, and so on layers are calculated all the way through the output layer. Since in this example there is only 1 hidden layer, which we have already calculated, leaving just a single neuron making up the output layer, we will go ahead and calculate it. Remember that both neurons fire. Also let me point out that this particular neural net is binary, meaning that when a neuron fires the value it passes on is 1, and when it doesn't fire the value it passes on is 0. So the first thing we do is take the value passed on by the first neuron, which is 1, and multiply it by its weighted connection to the output neuron, which is -0.1. We do the same thing for the second neuron and its weighted connection, then add the two numbers together in order to find out what the output neuron's activate value is. (1 * -0.1) + (1 * 0.3) = 0.2. Since the activate value is less than the threshold value this neuron will not fire; and thus, the output value for the neural net with these inputs is 0.
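The whole walk-through can be checked with a short script. Two values are assumptions because the text does not state them: the third weight into the first hidden neuron (a placeholder of 0.0 is used, which cannot affect the result since the third input is 0) and the output neuron's threshold (1 is assumed here to match the hidden neurons). All variable and function names are hypothetical.

```python
INPUTS = [12, 5, 0]

# Weights into each hidden neuron, listed in input order.
# Placeholder: the third weight into the first neuron is not given in the
# text; 0.0 is used, and it is irrelevant because the third input is 0.
HIDDEN_WEIGHTS = [
    [0.6, -0.4, 0.0],
    [0.4, 0.1, -0.2],
]
HIDDEN_THRESHOLDS = [1, 1]

OUTPUT_WEIGHTS = [-0.1, 0.3]
# Assumption: the output threshold is not given in the text. Any value
# above 0.2 leads to the same output for these inputs.
OUTPUT_THRESHOLD = 1


def activation(weights, inputs):
    return sum(w * x for w, x in zip(weights, inputs))


def step(value, threshold):
    """Binary firing rule: pass on 1 when firing, 0 otherwise."""
    return 1 if value >= threshold else 0


hidden_acts = [activation(w, INPUTS) for w in HIDDEN_WEIGHTS]
hidden_out = [step(a, t) for a, t in zip(hidden_acts, HIDDEN_THRESHOLDS)]
output_act = activation(OUTPUT_WEIGHTS, hidden_out)
network_out = step(output_act, OUTPUT_THRESHOLD)

# Values are rounded for display because floats carry tiny rounding errors.
print([round(a, 2) for a in hidden_acts])  # [5.2, 5.3]
print(hidden_out)                          # [1, 1]
print(round(output_act, 2))                # 0.2
print(network_out)                         # 0
```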

Notice though that it is impossible for this output neuron to ever fire. The reason is that its weights are so low that no matter what combination of firings the two connected neurons have, the activate value will never be equal to or greater than the threshold. A neural net that can have only one value won't be very useful, so we need to evolve it a bit to allow for more possible outcomes. There are a few ways to do this. One way is by adding more neurons in the hidden layer. The more connections there are, the more additions are made to the activate value and the more likely the neuron is to fire. Another way is to change the threshold value of the neuron. The lower the threshold value, the lower the activate number has to be for the neuron to fire. And yet another way to allow for both firing states is to change the weighted values of each connection until both states are possible outcomes. There are many ways of figuring out how to evolve the weights, and one of the most common methods is the back-propagation algorithm. See this website for details.
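A brute-force check makes the "can never fire" claim easy to verify, and shows each of the three fixes at work. As before, the output threshold of 1 is an assumption (the text does not state it), and the replacement threshold 0.25, the larger weight 1.2, and the extra neuron's weight 0.9 are hypothetical values chosen only to illustrate each fix.

```python
from itertools import product

OUTPUT_WEIGHTS = [-0.1, 0.3]
OUTPUT_THRESHOLD = 1  # assumption: not stated in the text


def reachable_activations(weights):
    """Activate value for every on/off combination of the binary hidden neurons."""
    return {
        combo: sum(w * x for w, x in zip(weights, combo))
        for combo in product((0, 1), repeat=len(weights))
    }


def can_fire(weights, threshold):
    """True if at least one combination of firings reaches the threshold."""
    return any(a >= threshold for a in reachable_activations(weights).values())


# Original neuron: the best case is 0.3 (only the second hidden neuron fires).
print(can_fire(OUTPUT_WEIGHTS, OUTPUT_THRESHOLD))     # False

# Fix 1: add a hidden neuron (hypothetical extra weight 0.9).
print(can_fire([-0.1, 0.3, 0.9], OUTPUT_THRESHOLD))   # True

# Fix 2: lower the threshold (hypothetical value 0.25).
print(can_fire(OUTPUT_WEIGHTS, 0.25))                 # True

# Fix 3: change a weight (hypothetical value 1.2).
print(can_fire([-0.1, 1.2], OUTPUT_THRESHOLD))        # True
```

Because an all-zero combination always gives an activate value of 0, the non-firing state stays reachable with any positive threshold, so each fix leaves both states possible.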
