Creating Self-Aware Intelligence

By ai-depot | March 31, 2003

Multiple Neural Networks

Visual Object Recognition

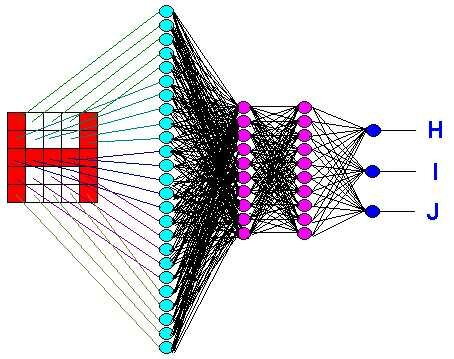

At this point you may be wondering how we can apply what we've just learned about neural networks to practical problems, so let's take a look at visual object recognition. The most common form of visual recognition is character recognition. How exactly does a neural net recognize the letter "H", for instance? It's actually relatively simple. First, take a look at the diagram above to get a quick overview of the concept.

In this example, a 5 by 5 grid (a 25-pixel-resolution eye, if you will) is fed into the input layer. Each pixel has two possible states: on or off (1 or 0). Most practical applications use a much higher resolution, but this is enough for our tutorial. The 25 inputs are then fed into the hidden layers. The number of neurons per layer, and even the number of layers, is not dictated by any known mathematical formula. The choice is up to you as the programmer, so you'll have to experiment a bit to get a feel for how many neurons and hidden layers each neural net you design needs. There can also be more than one output. In this example I want only the H neuron to fire when the neural net recognizes an H, only the I neuron to fire when an I is displayed, and so on. If any output neuron other than the correct one fires, we adjust the weights until we get the correct output every time. Here there are only 3 possible outcomes, but you can have as many outputs as you like, enough to cover the entire alphabet and any other objects you want the neural net to recognize.
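The article describes this network only in prose, so here is a minimal Python sketch with the same shape: 25 binary inputs, one hidden layer, and one output neuron per letter. Everything concrete in it is an assumption: the 10 hidden neurons, sigmoid activations, and backpropagation as the rule for "adjusting the weights" (a standard technique, not necessarily the one the author had in mind), plus the bitmaps themselves. The diagram names only H and I, so the third letter, T, is hypothetical.

```python
import math
import random

# Hypothetical 5x5 bitmaps (1 = pixel on). H and I follow the article's
# example; the third letter, T, is an assumption.
LETTERS = {
    "H": ["10001", "10001", "11111", "10001", "10001"],
    "I": ["11111", "00100", "00100", "00100", "11111"],
    "T": ["11111", "00100", "00100", "00100", "00100"],
}
LABELS = list(LETTERS)  # output neuron order: H, I, T


def to_inputs(rows):
    """Flatten a 5x5 grid into 25 inputs of 0.0 (off) or 1.0 (on)."""
    return [float(ch) for row in rows for ch in row]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """25 inputs -> one hidden layer -> one output neuron per letter."""

    def __init__(self, n_in=25, n_hidden=10, n_out=3, seed=0):
        rng = random.Random(seed)
        # Each weight row has one extra entry: a bias weight whose input is fixed at 1.0.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x):
        xb = x + [1.0]
        hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = hidden + [1.0]
        out = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return hidden, out

    def train_step(self, x, target, lr=0.5):
        """Backpropagation on squared error: nudge every weight to reduce the error."""
        hidden, out = self.forward(x)
        xb, hb = x + [1.0], hidden + [1.0]
        d_out = [(o - t) * o * (1 - o) for o, t in zip(out, target)]
        # Hidden deltas use the output weights before they are updated.
        d_hid = [h * (1 - h) * sum(d * self.w2[k][j] for k, d in enumerate(d_out))
                 for j, h in enumerate(hidden)]
        for k, d in enumerate(d_out):
            for j, v in enumerate(hb):
                self.w2[k][j] -= lr * d * v
        for j, d in enumerate(d_hid):
            for i, v in enumerate(xb):
                self.w1[j][i] -= lr * d * v

    def classify(self, x):
        """Report the letter whose output neuron is most strongly activated."""
        _, out = self.forward(x)
        return LABELS[out.index(max(out))]


net = TinyNet(n_out=len(LABELS))
examples = [(to_inputs(rows), [1.0 if label == name else 0.0 for label in LABELS])
            for name, rows in LETTERS.items()]
for epoch in range(1000):
    for x, target in examples:
        net.train_step(x, target)

for name, rows in LETTERS.items():
    print(name, "->", net.classify(to_inputs(rows)))
```

Picking the strongest output (argmax) is one way to decide which neuron "fired"; applying a fixed threshold such as 0.5 to each output is another.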

The hardest part of working with these networks is training them. In the real world, characters won't be as clear as the H in the diagram above. A character may be distorted a bit (for those of us with less-than-perfect handwriting), and other pixels that have nothing to do with the character may be lit up. These stray pixels are called noise. When training your own NN (neural network), once it has learned to recognize a perfect example, start altering the examples and adding noise until the NN reliably recognizes the pattern under those variations.
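The noise schedule described above can be sketched as a data-augmentation step that runs before training. This is an illustration under stated assumptions: it models noise only as randomly toggled pixels (it does not model distorted handwriting), and `add_noise` and `noisy_training_set` are hypothetical helper names. Noise starts at zero flips, matching the advice to teach perfect examples first.

```python
import random


def add_noise(grid, flips, rng):
    """Return a copy of a flat 0/1 pixel list with `flips` distinct pixels toggled."""
    noisy = list(grid)
    for i in rng.sample(range(len(noisy)), flips):
        noisy[i] = 1 - noisy[i]
    return noisy


def noisy_training_set(clean, max_flips, copies, seed=0):
    """Build (input, label) pairs with noise ramping from 0 flips up to max_flips."""
    rng = random.Random(seed)
    pairs = []
    for flips in range(max_flips + 1):  # flips == 0 yields the perfect examples first
        for label, grid in clean.items():
            for _ in range(copies):
                pairs.append((add_noise(grid, flips, rng), label))
    return pairs


# Hypothetical flattened 5x5 "H" (25 pixels, 1 = on).
H = [1, 0, 0, 0, 1,
     1, 0, 0, 0, 1,
     1, 1, 1, 1, 1,
     1, 0, 0, 0, 1,
     1, 0, 0, 0, 1]
data = noisy_training_set({"H": H}, max_flips=3, copies=2)
print(len(data))  # 8: four noise levels (0-3 flips) x one letter x two copies
```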

This method of object recognition is useful for all sorts of applications; it is not limited to character recognition. Imagine teaching a neural net to recognize various colors by linking its outputs to the possible colors, or even mixtures of colors by allowing more than one output to fire at the same time. We can even take this same technique for visual object recognition and apply it to audio recognition: instead of attaching each input to a pixel, we attach it to a frequency. Sit back and think up some more uses for neural networks. I'm sure you can come up with a dozen off the top of your head. Again, for more information on the intricate details of NNs, see the list of recommended websites at the end of the article.
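Two ideas from this paragraph fit in a few lines of Python. `fired_outputs` lets several output neurons fire at once by thresholding each one independently, which is what a color-mixture net would need; the 0.5 threshold and the red/green/blue outputs are assumptions. `frequency_inputs` turns a short audio clip into one input per frequency bin using a naive discrete Fourier transform; it is a hypothetical helper, and a real system would more likely use an FFT library.

```python
import cmath
import math


def fired_outputs(activations, labels, threshold=0.5):
    """Every label whose output neuron reaches the threshold, so several can fire at once."""
    return [label for label, a in zip(labels, activations) if a >= threshold]


def frequency_inputs(samples, n_bins):
    """One network input per frequency bin: naive DFT magnitudes, scaled by 1/N."""
    n = len(samples)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples))) / n
            for k in range(n_bins)]


COLORS = ["red", "green", "blue"]  # hypothetical output neurons
print(fired_outputs([0.92, 0.08, 0.81], COLORS))  # ['red', 'blue'] (a mixture)
print(fired_outputs([0.10, 0.97, 0.20], COLORS))  # ['green']

# A pure tone completing 4 cycles in 32 samples lights up input (bin) 4.
tone = [math.sin(2 * math.pi * 4 * t / 32) for t in range(32)]
spectrum = frequency_inputs(tone, n_bins=16)
print(spectrum.index(max(spectrum)))  # 4
```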

Brains With Multiple Neural Networks

At the beginning of the article I suggested that any problem that can be solved by a biological brain can also be solved by artificial neural networks, provided we break the network down into many smaller NNs, each specializing in its own particular task. This is crucial to solving complex real-world problems such as building a self-driving car, and to allowing machines to become self-aware.

Think about how we recognize the world visually for a moment. Look around the room and notice how many objects you recognize in an instant without even trying. A machine built with today's technology can accomplish this same kind of recognition using parallel neural networks and the right training. Just like a newborn baby, a newborn NN must be trained to recognize and respond to certain kinds of events.

Moving on to a specific scenario, imagine you're given the task of designing an artificial NN capable not only of recognizing objects but also of telling you where those objects are. There are a couple of ways to accomplish this. One is to create a single neural network whose outputs are: a starting x coordinate, a starting y coordinate, an ending x coordinate, an ending y coordinate, and a list of possible objects.

This solution works as long as we don't have to add any more objects to be recognized. If we do have to add another object, merely adding a hidden-layer neuron or an output neuron requires updating all the weights. This kind of neural net also takes a lot of training, so it is not the best solution to this problem.
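To make the single-network layout concrete, here is a sketch of how its output vector might be read, assuming the order [x_start, y_start, x_end, y_end, one score per object]. The names `Detection` and `decode_single_network` are hypothetical. The length check shows the brittleness described above: every object added to the list changes the size of the output layer, so a network trained on the old layout no longer fits.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    x_start: float
    y_start: float
    x_end: float
    y_end: float
    label: str
    score: float


def decode_single_network(outputs: List[float], object_labels: List[str]) -> Detection:
    """Read [x_start, y_start, x_end, y_end, score_0, score_1, ...] from one network."""
    if len(outputs) != 4 + len(object_labels):
        # A new object means a new output neuron, so the old layout no longer matches.
        raise ValueError("expected 4 coordinates plus one score per object")
    x0, y0, x1, y1 = outputs[:4]
    scores = outputs[4:]
    best = max(range(len(scores)), key=scores.__getitem__)
    return Detection(x0, y0, x1, y1, object_labels[best], scores[best])


outputs = [2, 1, 6, 8, 0.1, 0.9, 0.2]
print(decode_single_network(outputs, ["cup", "chair", "lamp"]))
# Detection(x_start=2, y_start=1, x_end=6, y_end=8, label='chair', score=0.9)
```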

A better solution is to create multiple neural networks that work together, with a single network for each object to be recognized. That way, the list of objects can easily be extended and categorized without retraining a single large NN every time we add a new object.
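The one-network-per-object design can be sketched as a registry of detectors. Each detector here is a hand-written stand-in for a small trained network (an assumption; `DetectorBank`, `has_full_row`, and `has_full_column` are hypothetical names), and the bank runs them side by side with a thread pool, echoing the parallel networks mentioned earlier. Adding an object means adding one entry; no existing detector is touched.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Image = List[List[int]]
Detector = Callable[[Image], float]  # returns a confidence between 0 and 1


class DetectorBank:
    """One specialist per object; adding an object never touches the others."""

    def __init__(self) -> None:
        self.detectors: Dict[str, Detector] = {}

    def add(self, name: str, detector: Detector) -> None:
        self.detectors[name] = detector

    def recognize(self, image: Image, threshold: float = 0.5) -> List[str]:
        names = list(self.detectors)
        with ThreadPoolExecutor() as pool:  # run every specialist side by side
            scores = list(pool.map(lambda n: self.detectors[n](image), names))
        return [n for n, s in zip(names, scores) if s >= threshold]


# Hypothetical stand-ins for small trained networks.
def has_full_row(image: Image) -> float:
    return 1.0 if any(all(row) for row in image) else 0.0


def has_full_column(image: Image) -> float:
    return 1.0 if any(all(col) for col in zip(*image)) else 0.0


CROSS = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]
bank = DetectorBank()
bank.add("horizontal bar", has_full_row)
print(bank.recognize(CROSS))  # ['horizontal bar']
bank.add("vertical bar", has_full_column)  # no retraining of the first detector
print(bank.recognize(CROSS))  # ['horizontal bar', 'vertical bar']
```

Threads keep the sketch short; because of CPython's global interpreter lock, CPU-heavy networks would more realistically run in a process pool or as one batched computation.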

Also create a separate NN for coordinate recognition. This can be as simple as having starting x, starting y, ending x, and ending y as the output layer, or as elaborate as copying the located image pixel by pixel onto a blank screen. When there are multiple objects to be recognized and located, each object is copied onto its own blank screen, which I'll call "iconic memory".

From there we can go further by feeding each of these screens, now containing a single object, into a time recognition NN that recognizes the patterns the object makes over time. So if there are many objects on the screen, several time recognition processes run in parallel. There is only one location identification NN, but it spawns multiple copies of itself as it identifies the location of each object. Likewise, there is only one time pattern NN, which also spawns multiple copies of itself. All the copies of the time NNs then feed into a higher-level NN that places all the objects together on another blank screen (another part of iconic memory), and the recursive process continues.
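The iconic-memory step can be sketched with plain lists of pixels. The bounding boxes are assumed to come from the location network, and `motion_over_time`, which just tracks an object's centroid across frames, is a hypothetical stand-in for one copy of the time pattern NN rather than a trained network. Each object gets its own blank screen, so its track is never disturbed by other objects in the frame.

```python
from typing import List, Optional, Tuple

Screen = List[List[int]]
Box = Tuple[int, int, int, int]  # (x_start, y_start, x_end, y_end), inclusive


def blank_screen(width: int, height: int) -> Screen:
    return [[0] * width for _ in range(height)]


def copy_to_iconic_memory(frame: Screen, box: Box) -> Screen:
    """Copy only the pixels inside `box` onto a fresh blank screen of the same size."""
    x0, y0, x1, y1 = box
    screen = blank_screen(len(frame[0]), len(frame))
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            screen[y][x] = frame[y][x]
    return screen


def centroid(screen: Screen) -> Optional[Tuple[float, float]]:
    points = [(x, y) for y, row in enumerate(screen) for x, v in enumerate(row) if v]
    if not points:
        return None
    return (sum(p[0] for p in points) / len(points), sum(p[1] for p in points) / len(points))


def motion_over_time(frames: List[Screen], boxes: List[Box]):
    """Hypothetical stand-in for one copy of the time pattern NN: a centroid track."""
    return [centroid(copy_to_iconic_memory(f, b)) for f, b in zip(frames, boxes)]


def make_frame(t: int) -> Screen:
    frame = blank_screen(6, 4)
    frame[1][t] = 1  # object A moves one pixel right per frame
    frame[3][5] = 1  # object B stays put
    return frame


frames = [make_frame(t) for t in range(3)]
# One track per object, each computed on its own iconic-memory screen.
print(motion_over_time(frames, [(t, 1, t, 1) for t in range(3)]))  # [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
print(motion_over_time(frames, [(4, 2, 5, 3)] * 3))  # [(5.0, 3.0), (5.0, 3.0), (5.0, 3.0)]
```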

When multiple neural networks each specialize in a particular task, they solve problems more efficiently, and they let higher-level networks look at the "big picture" without worrying about all the little details, such as how to walk without falling over. For example, say we have a robot on the first floor and an object it needs on the second floor, with a ramp connecting the two floors. Once we know how to get up a ramp, we don't have to actively think about it in order to get the object we need. We just walk up the ramp, leaving our mind free to think of other things.

That is also how we solve this problem with an android. Once we train one NN on the concept of up and down, and another NN on what a ramp is and what it is used for, all a higher-level NN has to do is concentrate on getting the object on the second floor, without worrying about the intricate details handled by the lower layers of its mind.
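The delegation idea can be sketched as a tree of skills in which a higher-level node only names its sub-goals and hands them down. This is a structural illustration under stated assumptions: `Skill` is a hypothetical class, and its leaf nodes stand in for trained low-level networks that would actually produce motor commands.

```python
class Skill:
    """A node in a hypothetical hierarchy of specialist controllers.

    Higher-level skills only delegate to their children; leaf skills stand in
    for trained low-level networks that handle the actual details.
    """

    def __init__(self, name, children=None):
        self.name = name
        self.children = children or []

    def run(self, log, depth=0):
        # Record this skill, indented by its depth in the hierarchy, then delegate.
        log.append("  " * depth + self.name)
        for child in self.children:
            child.run(log, depth + 1)
        return log


step = Skill("take a balanced step")  # one low-level skill reused by several parents
climb_ramp = Skill("climb ramp", [Skill("recognize ramp"), Skill("move up"), step])
fetch = Skill("fetch object on 2nd floor",
              [Skill("walk to ramp", [step]), climb_ramp, Skill("grab object")])

for line in fetch.run([]):
    print(line)
```

The top-level skill never mentions balance; that detail lives only in the shared leaf, which is the separation the ramp example describes.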

Freed from worrying about what the lower-level neural networks do, the higher-level NNs they connect to can seek out other patterns. When the higher-level mind wants to walk down the hall, it simply tells the lower levels to walk down the hall, and each lower-level NN begins its task, all the way down to the most basic levels. Because the mind is made of many neural networks working together, rather than a single massive neural network, a hierarchy of minds begins to form. The topmost mind is our self-awareness. It is an emergent property that arises when the higher-level minds (the top neural networks) begin to reflect on the patterns of their own body and mind.

We're very close to accomplishing this right now. The concept is not difficult, but we won't see results as early as tomorrow, because the hardest and most tedious part of the whole process is the training. Training takes a long time, but once a neural network has learned to be an expert in its task, we can train other neural networks on their own tasks and then sew these independent minds together into an additional, higher-level NN so that even higher-level learning can occur.
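Training experts first and then connecting them under a higher-level network resembles what is usually called stacking. Here is a minimal sketch, assuming two already-trained experts (hypothetical, hand-written stand-ins) that stay frozen, and a perceptron as the higher-level network; only the perceptron's weights change during training. The task, deciding whether to head up the ramp, is a hypothetical example based on the robot scenario above.

```python
# Hypothetical, already-trained low-level experts. They are frozen: nothing below changes them.
def ramp_expert(scene):
    return 1.0 if scene["slope"] > 0.1 else 0.0


def target_expert(scene):
    return 1.0 if scene["object_above"] else 0.0


EXPERTS = [ramp_expert, target_expert]


def expert_features(scene):
    """The higher-level net sees only the experts' outputs, plus a constant bias input."""
    return [expert(scene) for expert in EXPERTS] + [1.0]


def predict(weights, scene):
    return 1 if sum(w * x for w, x in zip(weights, expert_features(scene))) > 0 else 0


def train_combiner(scenes, labels, epochs=20, lr=1.0):
    """Perceptron learning rule applied to the combiner's weights only."""
    weights = [0.0] * (len(EXPERTS) + 1)
    for _ in range(epochs):
        for scene, label in zip(scenes, labels):
            error = label - predict(weights, scene)
            weights = [w + lr * error * x for w, x in zip(weights, expert_features(scene))]
    return weights


# Hypothetical task: head up the ramp only if there is a ramp AND the object is upstairs.
scenes = [{"slope": 0.0, "object_above": False}, {"slope": 0.3, "object_above": False},
          {"slope": 0.0, "object_above": True}, {"slope": 0.3, "object_above": True}]
labels = [0, 0, 0, 1]
weights = train_combiner(scenes, labels)
print([predict(weights, s) for s in scenes])  # [0, 0, 0, 1]
```

Because the experts are frozen, the higher-level network only has three weights to learn, which is the payoff of training the specialists first.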

I believe that in the next 20 to 30 years we will see self-aware machines begin to emerge. We will create in a few decades what took Mother Nature billions of years to accomplish.

Recommended Reading

While most of the philosophical workings of this writing have come from observations of my own mind over the course of ten years of thought, many books have greatly contributed to shaping or strengthening my ideas. In no particular order, a few are listed here:

- Matter and Consciousness (Revised Edition) by Paul M. Churchland

- Theory of Motivation (Second Edition) by Robert C. Bolles

- Brain, Mind, and Behavior by Floyd E. Bloom, Arlyne Lazerson, and Laura Hofstadter

- Mapping the Mind by Rita Carter

- The Society of Mind by Marvin Minsky

Recommended Websites

There are many great websites that explain different aspects of artificial intelligence and neural networks. I have listed a few of my recommendations here:

http://ai-depot.com

http://www.generation5.org

http://www.ai-junkie.com

http://ei.cs.vt.edu/~history/Perceptrons.Estebon.html

http://www.shef.ac.uk/psychology/gurney/notes/l5/l5.html

Written by Clint O'Dell.
