Emergent Intelligence

By ai-depot | August 16, 2002

Ants Colony and Multi-Agents

Ants Colony and Multi-Agents

Individual ants are simple insects with limited memory and capable of performing simple actions. However, an ant colony expresses a complex collective behavior providing intelligent solutions to problems such as carrying large items, forming bridges and finding the shortest routes from the nest to a food source.

A single ant has no global knowledge about the task it is performing. The ant’s actions are based on local decisions and are usually unpredictable. The intelligent behavior naturally emerges as a consequence of the self-organization and indirect communication between the ants. This is what is usually called Emergent Behavior or Emergent Intelligence.

There are many other examples of Emergent Behavior in nature such as colonies of bacteria, bees and so on. There are even theories that explain the human conscience as an Emerging Behavior from the interactions and self-organization of the simple individual neurons! But this we can discuss in another opportunity.

The fascinating behavior of ants has been inspiring researches to create new approaches based on some of the abilities of the ants’ colonies. Some of the existing applications include the Traveling Salesman Problem, graph coloring, logistics and a lot more.

The practical example covered in this essay involves finding a path linking two nodes in a graph. In order to solve this problem, two characteristics of ants’ colonies will be particularly useful:

- their ability to find the shortest route between the nest and a food source, which will be used to find and optimize a path in the graph;

- the simplicity of each individual ant, which will make it easy for us to model the ant colony as a Multi-Agent System;

Foraging behavior of ants

First, let’s understand the foraging behavior of ants and how they can manage to find the shortest path between the nest and a food source using simple local decisions.

Ants use a signaling communication system based on the deposition of pheromone over the path it follows, marking a trail. Pheromone is a hormone produced by ants that establishes a sort of indirect communication among them. Basically, an isolated ant moves at random, but when it finds a pheromone trail there is a high probability that this ant will decide to follow the trail.

An ant foraging for food lay down pheromone over its route. When this ant finds a food source, it returns to the nest reinforcing its trail. Other ants in the proximities are attracted by this substance and have greater probability to start following this trail and thereby laying more pheromone on it. This process works as a positive feedback loop system because the higher the intensity of the pheromone over a trail, the higher the probability of an ant start traveling through it.

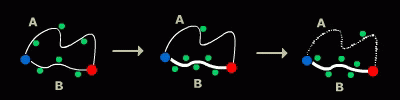

In order to understand how this process leads the colony to optimize a route, let’s take a look at the following example:

Suppose some ants were randomly searching for food when they found two different routes between the nest and the source. Since the route B is shorter, the ants on this path will complete the travel more times and thereby lay more pheromone over it.

As the process continues, the pheromone concentration on trail B will increase at a higher rate than on A. And soon, even those ants on the route A will choose to follow the trail B.

Since most ants are no longer traveling through route A and also due to the volatile characteristic of the pheromone, the trail A will start evaporating and soon just the shortest route will remain. That’s the trick!

Modeling the Ants Colony as a Multi-Agent System

This section will explain how to design a multi-agent system in order to imitate the collective behavior of the ants.

Environment

Considering the graph search proposed and the foraging behavior of ants, it’s easy to identify the similarity between these two problems. While ants try to find the best route between two places within an environment, a graph search algorithm tries to find the best path connecting two nodes within a graph. The main idea of the system proposed in this article is simply to put ants walking on a graph and observe the intensity of pheromone on its edges in time.

Think of graph’s nodes as different places where ants could stop during a travel and let’s call them cities. The edges of the graph will, of course, represent the routes connecting cities. This virtual environment will be populated by Agents representing individual ants.

In the AI domain, an Agent might be considered as an autonomous entity that interacts within an environment. Agents have only a dynamic partial representation of its environment that can be changed by their sensing abilities. They can also perform actions based on local perceptions and on their internal representation of the environment. These actions can affect the environment, the agent itself or even other agents.

After taking a detailed look at the description of the foraging behavior of ants with the Agents concept in mind, it should not be difficult to identify the relevant characteristics needed by an agent in order to simulate the ants behavior.

The agents will need to walk on a graph from a city to another. They will also need to pick food when they get to the food source and leave food when getting back to the nest. When traveling through a route, the agents must be able to put pheromone on it. Finally, the agents must be able to decide which will be the next path to follow based on the pheromone intensity over the available paths. These are basically the actions an agent could perform depending on its current state.

The following table describes the actions an agent can perform depending on each state.

| Local | Action |

| In a city | Choose next route and put pheromone on it |

| On a route | Walk one more step |

| In nest carrying food | Leave Food |

| In the food source | Pick Food and return to the nest |

An agent on a route will walk a step at each turn until it arrives in a city. Once the agent gets there it will choose another route based on the intensity of the pheromone over the available paths. The agent will repeat this process until it finds the food source, where it will pick food and then start the returning travel following its own pheromone trail. Back again in the nest the agent will leave the food and then start the whole process again. Note that all these actions require only local information and a short memory allowing the agent to recognize its own trail back to the nest.

Implementation

An easy way to develop a multi-agent simulation environment is using a turn based system. Think of it as a game where at each turn all players make one single move. This is exactly how the simulation will work. Each agent will perform just one of the actions described above at each turn.

All the elements of the environment were modeled using C++ classes. The Ant class represents the agents, the Route represents the edges of the graph, the City represents the nodes and the Civilization represents the environment.

Since these elements are simple, the implementation becomes simple. Many properties and methods of the classes are specific for the interface or just auxiliaries. To keep things didactic, just the essential aspects will be covered here. Check the source code for more details.

The City is the simplest entity. It basically knows its (X, Y) position in the environment. This will be necessary to calculate the distance between two cities.

The Route needs two pointers to City objects in order to identify the cities it is connecting. Another property is the length of the route. The longer the route, the more turns an agent will need to cross it. The pheromone intensity over a route is also represented in this class.

Another important thing that must be simulated is the volatile characteristic of the pheromone. The route will also need a method to simulate its evaporation.

class Route { private: float Length; // Length of the road int Pheromone; // Pheromone intensity over the road City *FirstCity; // Cities connected by this road City *SecondCity; public: void EvaporatePheromone(); // Simulate the evaporation of the pheromone // constructors, interface and auxiliary methods and properties omitted: // . . . (check full source code for details) };

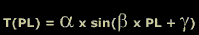

The most important characteristic of an ant in this context is related to its individual and unpredictable tendency to choose a certain route among the many available. Each instance of the class Ant must represent an individual agent with singular characteristics. This can be implemented by using a mathematical function. As described above the pheromone level over a route is measured by an integer number. The agent will use a method that evaluates its tendency of choosing a route based on the pheromone intensity. A good variability of the behavior of the agents can be expressed as a sinusoidal function with at least three coefficients:

The input PL is the pheromone level over a route. Alfa, Beta and Gamma will be properties of the Ant class initialized as random float numbers within the interval [-5..5]. These properties will make possible to have different individuals in the population. They are also needed by the Genetic Algorithms that will be covered further.

class Ant

{

private:

float alfa; // Indicates the Ant’s pheromone sensibility

float beta;

float gamma;

bool HaveFood; // Indicates if the Ant is carrying food

public:

float GetTendency(int PheroLevel);// tendency of choosing a route

void PickFood(); // Pick food when in the food source

void LeaveFood(); // Leave food when in the nest

void PutPheromone(); // Increase pheromone level of route

void Walk(); // Walk one more step

// constructors, interface and auxiliary methods & properties omitted

// . . . (check full source code for details)

};

The Civilization is the class that will control the whole environment and the simulation process. It will be also responsible for the evolution process. A graph must be created by the user through the interface of the simulation program. Two nodes of the graph must be specified as the nest and the food source. When the simulation begins, a random number of agents are created in the nest. At each turn the agents will perform actions depending on their current position as explained before. As the simulation runs the most used routes will have their pheromone level increased and after some time a solution will emerge from the collective behavior of these virtual ants.

class Civilization

{

private:

City *FoodSourceCity; // The Civilization’s Food Source City

City *Nest; // The Civilization’s Nest

TList *Routes; // All Routes in the Environment

TList *Cities; // All Cities in the Environment

TList *Ants; // All Ants in the Environment

int NaturalSelection; // Turns remaining before the next natual selection

public:

void NextTurn(); // Perform one turn of the simulation

// constructors, interface and auxiliary methods and properties omitted:

// . . . (check full source code for details)

};

Although this system can provide good results, a random number of agents with random characteristics may not always solve a given problem. Also, a population of agents that is able to find the shortest path in a graph may not be able to find a solution in a complete different environment. So, how to find out the best agents for a given problem? How many agents are necessary to perform a search in graphs with different complexities? How to balance agents with different characteristics to compose a good population?

All these questions point to Genetic Algorithms which will be discussed in the next section.

Tags: none

Category: tutorial |